Investigating Issues in Production

Over the past few years of working with production systems, I’ve collected a few war stories of doing Root Cause Analysis (RCA) on production issues. Each time has been different, but after doing it several times you begin to see patterns. Based on these patterns, checking the usual suspects before trying anything out of the box helps solve issues fast. This article is a list of the usual suspects I go to first, before doing anything fancy.

Almost no two issues are the same, but a checklist is a good starting point for any of them. It not only gets you into action fast, but also makes sure you don’t miss the basics. I use the following steps when debugging an issue in production. I’ve learnt them the hard way, after wasting lots of time skipping some of these steps and then coming back to them only to realize they would have pointed me in the right direction in one go.

These steps are written with backend systems in mind, but many of them carry over to other systems (Frontend, DS, etc.) as well. They may seem to overlap with the RCA process for an incident, but this is more of a technical, practical how-to take on it.

Pre-debug Checklist

Having an environment set up and being ready to dive right into the problem saves you the frustration of setting one up before you’ve even looked at the problem.

Make Sure You’ve Checked Out the Deployed Branch/Tag

Seems like a no-brainer, but I’ve seen this happen multiple times: a bug is fixed in master but still present on the deployed branch, and the team spends countless hours trying to find it in master.

Always check out the branch/tag that has the issue before you start investigating it.
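Before diving in, it can help to check that your working copy really is at the deployed tag. The Python sketch below compares the commit `HEAD` points to with the commit a tag points to, using `git rev-parse`. The `run` parameter (an injectable command runner, so the check can be exercised without a real repository) is an assumption for illustration, not part of any particular deploy tooling.

```python
import subprocess
from typing import Callable, Sequence

# A runner takes git arguments and returns stdout. It is injectable so the
# check can be exercised without a real repository.
Runner = Callable[[Sequence[str]], str]


def run_git(args: Sequence[str]) -> str:
    """Run `git <args>` in the current directory and return its trimmed stdout."""
    result = subprocess.run(
        ["git", *args], check=True, capture_output=True, text=True
    )
    return result.stdout.strip()


def head_matches_tag(tag: str, run: Runner = run_git) -> bool:
    """True if the checked-out commit is exactly the commit `tag` points to."""
    head = run(["rev-parse", "HEAD"])
    # `^{commit}` peels an annotated tag down to the commit it references.
    tagged = run(["rev-parse", f"{tag}^{{commit}}"])
    return head == tagged
```

For example, with `v1.4.2` standing in for whatever tag your deploy tooling reports, `head_matches_tag("v1.4.2")` returns `False` until you run `git checkout v1.4.2`.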

Have a Working Debugger

A fully working dev environment with a working debugger makes RCA a breeze. Once it’s set up, you can move very fast.

Without a debugger, you need to run the whole process end-to-end and then play a guessing game based on the logs and the state changes made by the program. With a debugger, each time you want to reassess the code, you can stop at the exact line where you suspect the issue to be. It lets you inspect the data before and after each line, minimizing the surface area you need to look at and helping you cut down the search space.

Almost all languages have their own set of debuggers you can use. I generally prefer a GUI-based debugger over a CLI-based one, as it gives you a dashboard with all the information at a given breakpoint.
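Most debuggers also support conditional breakpoints, which pause only on the suspicious input instead of on every iteration. As a minimal Python sketch (the `reconcile` function and its negative-amount condition are hypothetical), the built-in `breakpoint()` drops into `pdb` by default; a GUI debugger lets you attach the same condition to a breakpoint in the editor instead of in code.

```python
def reconcile(orders: list[dict]) -> float:
    """Hypothetical function under investigation: sums order amounts."""
    total = 0.0
    for order in orders:
        amount = order.get("amount", 0.0)
        # Conditional breakpoint: pause only on the suspicious record instead of
        # stepping through every iteration. breakpoint() starts pdb by default;
        # setting the environment variable PYTHONBREAKPOINT=0 turns it into a no-op.
        if amount < 0:
            breakpoint()
        total += amount
    return total
```

Once paused, `p order` prints the current record, `n` steps to the next line and `c` continues. Remember to remove the call before committing.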

Minimize the Differences between Dev and Prod Environments

I’ve failed to reproduce a lot of Prod issues in my Dev environment because I didn’t have an exact replica of the system locally.

Recently, I spent almost a day trying to find a thread exhaustion bug in my code. It was caused by creating a new connection to Kafka on every request, and I had a flag that stopped my local instance from connecting to or writing to Kafka, so the bug never showed up locally.

These kinds of issues generally happen when the problem lies in communicating with an external entity that doesn’t belong to the service you’re debugging. It could be a queue, a DB, an internal service or a vendor service.
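To make the failure mode concrete, here is a minimal Python sketch of the per-request-connection bug. `FakeProducer` is a hypothetical stand-in for a Kafka producer: many client libraries run background I/O threads for each client instance, so creating one per request and never closing it leaks a thread every time. With a local flag that skips Kafka entirely, neither handler below would create a producer at all, which is why a bug like this stays invisible locally.

```python
import threading


class FakeProducer:
    """Hypothetical stand-in for a Kafka producer client.

    Like many real clients, it starts a background thread when created, and
    that thread only exits when close() is called.
    """

    live = 0  # number of producers whose background thread is still running

    def __init__(self) -> None:
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._stop.wait, daemon=True)
        self._thread.start()
        FakeProducer.live += 1

    def send(self, topic: str, value: bytes) -> None:
        pass  # a real client would queue the message for its I/O thread

    def close(self) -> None:
        if not self._stop.is_set():
            self._stop.set()
            self._thread.join()
            FakeProducer.live -= 1


def handle_request_leaky(payload: bytes) -> None:
    # BUG: a new producer (and a new thread) per request, never closed.
    producer = FakeProducer()
    producer.send("events", payload)


_shared_producer = FakeProducer()  # created once, at startup


def handle_request_fixed(payload: bytes) -> None:
    # FIX: every request reuses the single long-lived producer.
    _shared_producer.send("events", payload)
```

Calling `handle_request_leaky` ten times leaves ten extra producer threads running, while `handle_request_fixed` leaves the count unchanged.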

Whatever the case, to avoid being left clueless, try to get your local environment to mimic production as closely as possible. It might not be possible or feasible to do this in every case, though (e.g. payment providers).
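One cheap way to see how far your local setup is from production is to diff the two configurations. The sketch below assumes both are available as flat key/value mappings (for example, loaded from environment files); the keys in the usage note are hypothetical.

```python
from typing import Any, Mapping


def config_drift(
    dev: Mapping[str, Any], prod: Mapping[str, Any]
) -> dict[str, tuple[Any, Any]]:
    """Return {key: (dev_value, prod_value)} for every key that differs.

    A key missing on one side is reported with the placeholder "<unset>".
    """
    missing = "<unset>"
    drift = {}
    for key in sorted(set(dev) | set(prod)):
        dev_value, prod_value = dev.get(key, missing), prod.get(key, missing)
        if dev_value != prod_value:
            drift[key] = (dev_value, prod_value)
    return drift
```

With a hypothetical `dev = {"KAFKA_ENABLED": False}` and `prod = {"KAFKA_ENABLED": True}`, `config_drift(dev, prod)` returns `{"KAFKA_ENABLED": (False, True)}`, flagging exactly the kind of local switch that hid the Kafka bug.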

Note down the issue case and the happy case

This is generally a good starting point for the investigation. It records the problem and the expected state of the system. With both cases written down, you can refer back to them without getting confused by all the data you’ll go through during the investigation. At each step you can also ask yourself: is this something that could have caused the noted issue?

Debugging the Issue

Once you have the environment ready to go, you can start diving into the actual issue. Alongside the following steps, keep following the RCA process for incidents.

Reproduce the Issue

I can’t stress this enough. This is the single most important step for understanding any issue. There is only so much you can conclude about an issue by treating it as a black box and cross-verifying the execution against the code.

It might not be possible to reproduce the issue in some cases, where the inputs that trigger it are out of your control and it shows up only intermittently. But in the rest of the cases, this should be your first step.

Once you have a way to reproduce the issue and a debugger at your command, you can turn your focus to:

- Identifying suspected problematic lines of code

- Adding breakpoints on them

- Checking the state before and after those lines of code are executed

Repeating these steps over every area of code where you suspect the issue to be will help you identify the one at fault.
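When you can’t attach a debugger (say, on a shared staging box), you can approximate the before/after check by logging a function’s inputs and output. A minimal Python sketch follows; `apply_discount` is a hypothetical suspect that mutates its argument, and deep-copying the arguments first is what makes that mutation visible in the logs.

```python
import copy
import functools
import logging

logger = logging.getLogger("rca")


def snapshot_state(func):
    """Log a function's arguments before it runs, and arguments plus result after.

    Arguments are deep-copied up front, so any in-place mutation by the
    function shows up as a difference between the two log lines.
    """

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        before_args, before_kwargs = copy.deepcopy((args, kwargs))
        logger.debug("%s before: args=%r kwargs=%r", func.__name__, before_args, before_kwargs)
        result = func(*args, **kwargs)
        logger.debug(
            "%s after: args=%r kwargs=%r result=%r", func.__name__, args, kwargs, result
        )
        return result

    return wrapper


@snapshot_state
def apply_discount(cart: dict, pct: float) -> float:
    """Hypothetical suspect: it overwrites the caller's cart total in place."""
    cart["total"] = cart["total"] * (1 - pct / 100)
    return cart["total"]
```

With `logging.basicConfig(level=logging.DEBUG)`, calling `apply_discount({"total": 200.0}, 50)` logs the cart with `'total': 200.0` before the call and `'total': 100.0` after it.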

Don’t Do the Same Thing Again without Making Changes

Insanity is doing the same thing over and over and expecting a different result – often attributed to Albert Einstein

Okay, maybe that heading and quote were a bit far-fetched.

But each time you run the broken code path, take notes and try to zero in on the suspected areas; if possible/required, make changes to the suspected code and rerun. This way you avoid repeating the same run and expecting a different outcome without having changed anything.

This goes both ways: when you’re trying to reproduce an error (change the inputs if you cannot reproduce it) and when you’re trying to fix the problem (change the code if the current change doesn’t work).

Take this with a grain of salt, as it could be bad advice for bugs that involve random numbers or time. Basically, don’t apply it whenever the input variables are out of your control and can change arbitrarily.
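When randomness or time is involved, you can often make the code path repeatable again by injecting them instead of reaching for globals. In the Python sketch below, the hypothetical `retry_delay` draws jitter only from the `random.Random` instance it is given, and the hypothetical `is_expired` takes its clock as a parameter, so a reproduction can pin both.

```python
import random
import time
from typing import Callable


def retry_delay(attempt: int, rng: random.Random) -> float:
    """Hypothetical jittered backoff: all randomness comes from `rng`."""
    base = min(2 ** attempt, 30)  # exponential growth, capped at 30 seconds
    return base * rng.uniform(0.5, 1.0)


def is_expired(expires_at: float, now: Callable[[], float] = time.time) -> bool:
    """Hypothetical expiry check whose notion of "now" is injectable."""
    return now() >= expires_at
```

Passing `random.Random(42)` on two runs yields the same delay both times, and `is_expired(100.0, now=lambda: 100.0)` lets you replay a chosen instant exactly, so changing one thing at a time becomes meaningful again.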

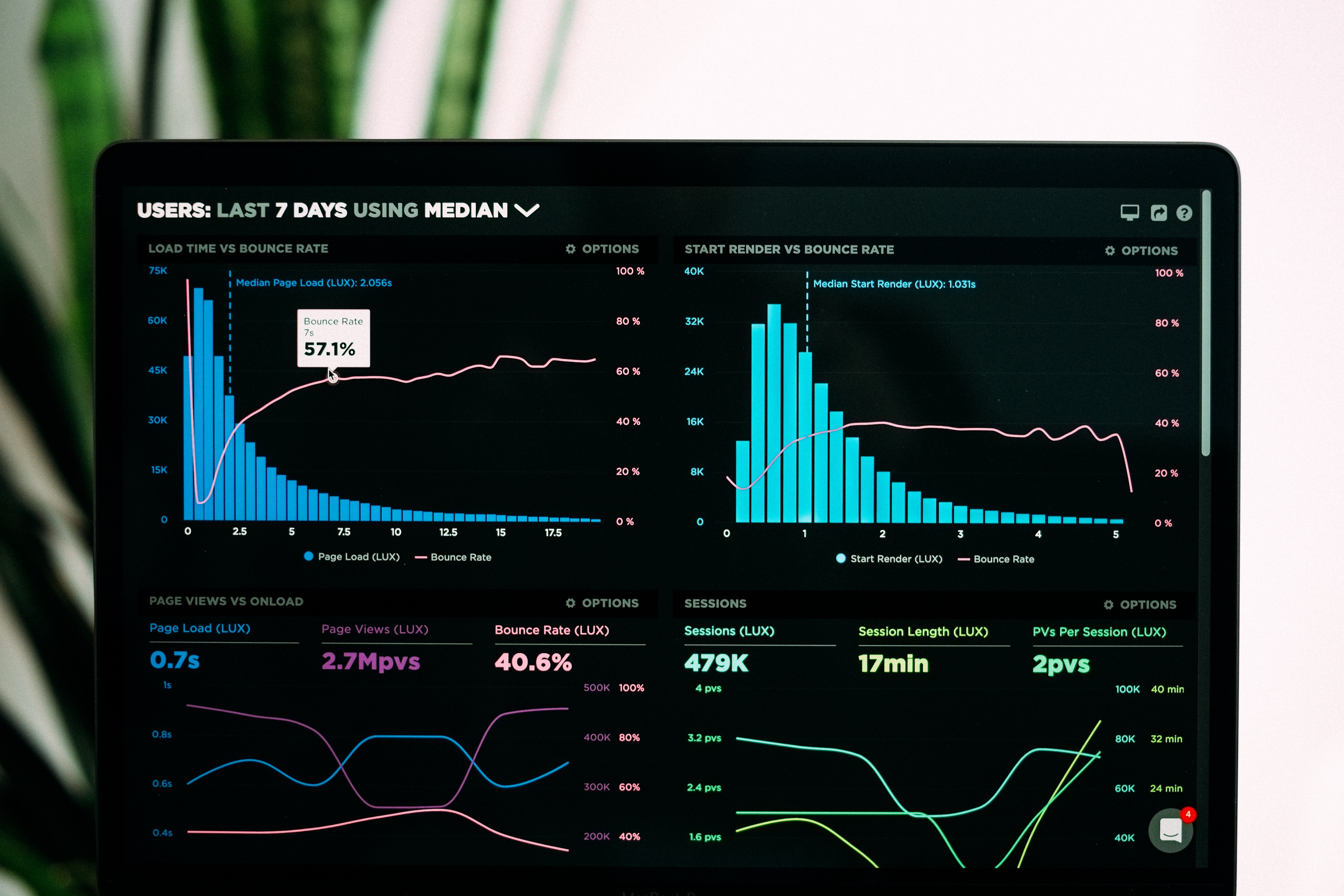

Check your Monitoring Tools

Having a dashboard that gives you an at-a-glance view of your service’s vitals is a huge advantage and can make identifying certain types of errors trivial. Errors like memory leaks, thread exhaustion and high CPU utilization can all be caught easily this way.

For example, if you see memory utilization keep going up with each request your service receives and never come down, even after the request has completed, you can be almost (yes, it’s always almost, never for sure) certain that you have a memory leak associated with that endpoint. You can then use the same graphing tools to zero in on the endpoint that could be causing it by correlating request count with memory usage.

Though it sounds a bit trivial, many application health issues like this can be caught using monitoring tools alone. Lean on them more when dealing with issues related to the overall health of your application.

Deep dive into the logs

Logs are where the details are; they’re closest to the code. With proper logging in place, logs alone can be used to investigate and solve plenty of issues.

If your organization uses distributed tracing and distributed logging, leverage them to quickly cut down your search space and find the problematic area. Get the trace ID and search your aggregated logs for it. Once you have the entire request flow in front of you, it becomes easier to zero in on the problematic service or area of code. Many logging tools also provide advanced pattern-based search over time ranges, which can be used to filter out exactly what you need.
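If your aggregated logs can be exported as JSON lines, pulling out one request’s flow is a filter plus a sort. This Python sketch assumes a schema with `trace_id`, `ts` (ISO-8601 timestamps in one consistent format and timezone, so they sort correctly as strings) and `service` fields; real log platforms have their own query languages that do this server-side.

```python
import json
from typing import Iterable


def request_flow(lines: Iterable[str], trace_id: str) -> list[dict]:
    """Return every JSON log event carrying `trace_id`, ordered by timestamp.

    Assumes one JSON object per line with "trace_id" and "ts" fields, where
    "ts" is an ISO-8601 string in a single format; adjust to your log schema.
    """
    events = []
    for line in lines:
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip non-JSON noise such as stack-trace continuation lines
        if isinstance(event, dict) and event.get("trace_id") == trace_id:
            events.append(event)
    return sorted(events, key=lambda e: e["ts"])
```

Feeding it the exported lines and the trace ID of the failing request gives you that request’s events from every service in order, so you can follow its path from the first service to the last.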

For this to work, you need the right balance of logs in your application. Log too little and you won’t have context; log too much and you’ll have information overload. To maintain this balance, each time you’re debugging something and looking at the logs, ask yourself whether a certain log was helpful or just noise; if it’s the latter, get rid of it. The same goes the other way: if you feel the logs are missing some vital info, add that log for the future.
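Log levels and request context help keep that balance. In this Python sketch (the `checkout` logger, the `handle_checkout` function and the `[trace=...]` prefix are hypothetical), a `logging.LoggerAdapter` stamps every message with the trace ID, high-volume details go to `DEBUG` and key events to `INFO`, so the noise can be switched off in production without deleting it.

```python
import logging


class TraceAdapter(logging.LoggerAdapter):
    """Prefix every message with the request's trace ID."""

    def process(self, msg, kwargs):
        return f"[trace={self.extra['trace_id']}] {msg}", kwargs


def handle_checkout(cart_id: str, trace_id: str) -> None:
    """Hypothetical request handler showing which events go at which level."""
    log = TraceAdapter(logging.getLogger("checkout"), {"trace_id": trace_id})
    # Detail that helps while debugging but is noise day to day: DEBUG.
    log.debug("loaded cart %s", cart_id)
    # A key event you always want in the logs: INFO.
    log.info("checkout started for cart %s", cart_id)
```

With a handler attached and the `checkout` logger set to `INFO`, `handle_checkout("c1", "t-1")` emits only `[trace=t-1] checkout started for cart c1`; lowering the level to `DEBUG` while investigating brings back the cart-loading detail.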

For a comprehensive guide on what to log and what not to, do check out The 10 commandments of logging.

Minimize the Search Space

This has already been covered in earlier sections. The main point is to cut down your search space and arrive at the suspected parts of the code ASAP, without wasting time on anything else. Logging, monitoring, reproducing the issue, etc. all help with this.

Always deploy using tags in your VCS. That way you can see every change between the last working deployment and the current one, and focus on those lines of code.
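With tags in place, listing what changed between the last good deployment and the current one is a single `git log` over a revision range. The Python wrapper below is a sketch; the tag names are placeholders and `run` is an injectable command runner (an assumption for testability) that defaults to calling the real `git` binary.

```python
import subprocess
from typing import Callable, Sequence


def run_git(args: Sequence[str]) -> str:
    """Run `git <args>` in the current directory and return its stdout."""
    result = subprocess.run(
        ["git", *args], check=True, capture_output=True, text=True
    )
    return result.stdout


def changes_between(
    last_good: str, current: str, run: Callable[[Sequence[str]], str] = run_git
) -> list[str]:
    """List commits reachable from `current` but not from `last_good`, one per line."""
    out = run(["log", "--oneline", f"{last_good}..{current}"])
    return [line for line in out.splitlines() if line.strip()]
```

`changes_between("v1.4.1", "v1.4.2")` runs `git log --oneline v1.4.1..v1.4.2`; `git diff --stat v1.4.1 v1.4.2` summarizes the same change set per file.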

Keep notes

A lot of bug hunting is like detective work. To avoid going in circles and repeating things you’ve already tried, you must keep notes.

Keeping notes makes you consciously think about your last attempt, gives you a record of everything you’ve tried, and makes it super easy to hand over the RCA to another person/team if you need to.

They also help you when you need to write up a Postmortem of an incident.

Get Another Set of Eyes

Sometimes you work on an issue for so long that you start taking some things for granted. Get a coworker, give them enough context about the problem and a summary of everything you’ve tried, and work with them to figure it out.

Walk away from the problem for some time

Walk Away From Your Computer. Seriously.

Sometimes it’s the Infra Teams

At many companies, infra teams are the ones that manage all of the deployment infrastructure (cloud provider accounts, VPCs, network configs, etc.) and development infrastructure (managed git repos, CI tools, etc.). Sorry for taking a jibe at infra teams, but sometimes the infra-level changes they make can cause issues in your applications. These changes can include protocol changes, capacity changes, DB version changes and DNS entry changes, among other things. Whenever you’re debugging an issue that seems to pop up out of nowhere and you remember seeing a mail from the infra team about a DB version upgrade, just check with them.

Post Debug Checklist

Once you’re done debugging, it is important to let the team know the process you followed and the follow-ups planned.

Share your RCA Process

Sharing a summary of your RCA process helps everyone on the team learn about the issue, the codebase and the dos and don’ts of debugging. What you think was a simple step could be something most people on the team didn’t know about, and it could be invaluable experience for them.

Write Down the Chosen Solution and the Why behind it

In many cases, there are multiple ways to deal with the broken code path. If most of them require significant code changes, writing down what you chose and why is helpful, so that anyone with questions about it in the future can refer to your write-up.

Update Your Test Suite

If possible, add a test case for the issue so that it doesn’t recur. Again, this might not be possible in some cases where the issue was caused by an external dependency (testing it is either not worth the effort or just not possible).
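A regression test works best when it encodes both the issue case and the happy case you noted at the start. The Python sketch below uses `unittest`; `parse_amount` and its bug (converting `"0.29"` through `float` yields 28 cents, because `0.29 * 100` is `28.999999999999996` in binary floating point) are hypothetical, chosen only to show the shape of such a test.

```python
import unittest


def parse_amount(raw: str) -> int:
    """Hypothetical fixed function: convert a non-negative decimal string to cents.

    The (hypothetical) buggy version was int(float(raw) * 100), which turns
    "0.29" into 28 because 0.29 * 100 == 28.999999999999996 in floating point.
    """
    whole, _, frac = raw.partition(".")
    return int(whole) * 100 + int((frac + "00")[:2])


class ParseAmountRegressionTest(unittest.TestCase):
    def test_issue_case_from_rca(self):
        # The exact input from the incident, so the bug cannot quietly return.
        self.assertEqual(parse_amount("0.29"), 29)

    def test_happy_case(self):
        self.assertEqual(parse_amount("12.34"), 1234)
        self.assertEqual(parse_amount("5"), 500)
```

Run it with `python -m unittest` alongside the rest of your suite.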

Caveats

Many of these steps won’t apply in every scenario, but they can still be a good starting point. In issues like network partitions in distributed systems, you mostly rely on logs, monitoring tools and your detective skills alone to arrive at a root cause and possible mitigations.

Outro

These are the techniques I use in prod to do RCA and debug issues. If you want to learn more about dealing with such issues and eliminating most of them before your code hits prod, be sure to check out Google’s free book, Site Reliability Engineering. I’ve learnt a great deal from it.

Share your thoughts below, or if you think this checklist can be improved, add your point below and I’ll try to incorporate it here!